In this post, we deep dive into Zanzibar’s architecture and implementation. We will uncover how Google achieves high-performance authorization checks at a global scale. From ACL servers to Leopard Indexing, discover the innovative techniques powering this system.

To understand the design principles of Zanzibar read the part 1 of this article.

- Architecture and Implementation

- References

Architecture and Implementation Link to heading

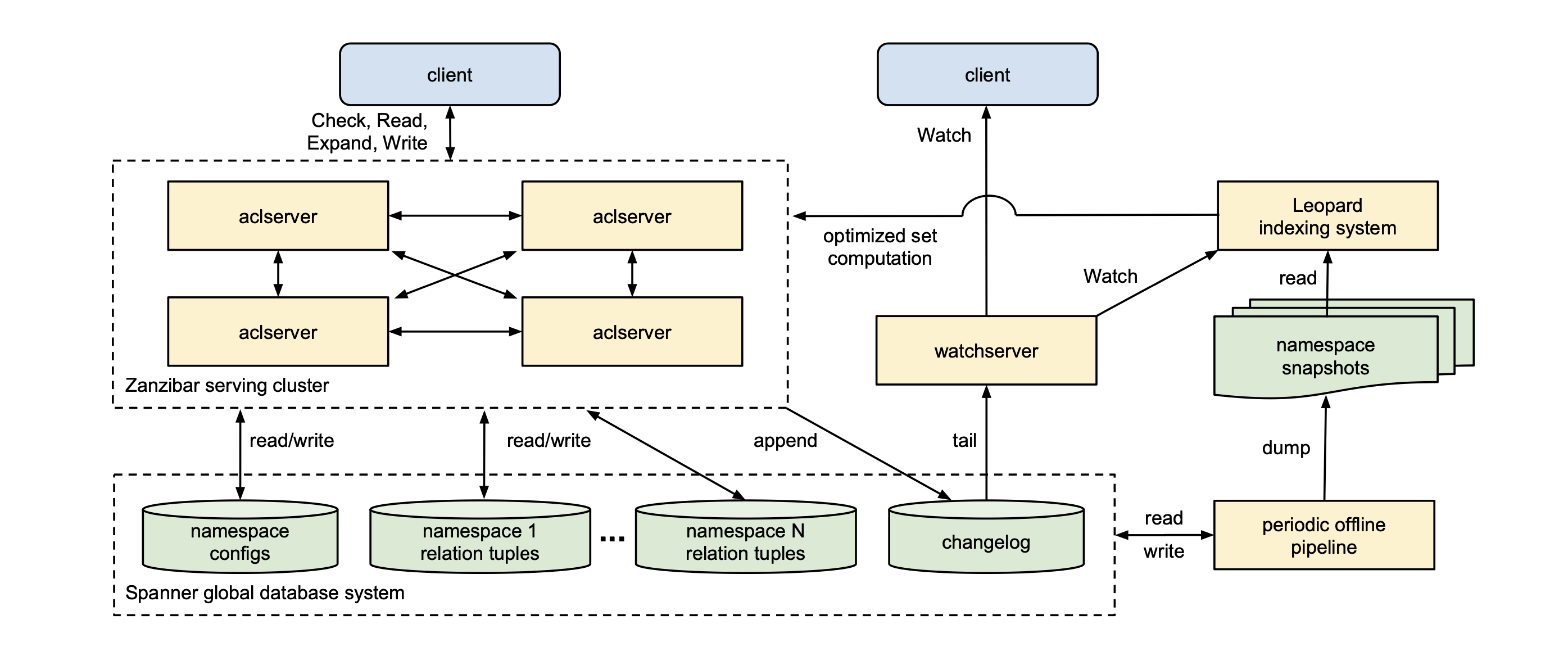

Architecture of Zanzibar

ACL Servers Link to heading

ACL servers are the main server types organized in clusters to handle Read, Write, Check, and Expand requests. Requests in each cluster are fanned out to other servers as necessary to compute the response.

Watch Servers Link to heading

The watch server cluster is a separate cluster to handle watch requests. These servers communicate with the changelog database to serve a stream of namespace changes to clients in near real-time.

Databases Link to heading

Zanzibar setup in Google uses Spanner databases to store ACLs and their metadata. Relation tuples for each client namespace are stored in one database and another database to store the changelog. Every Zanzibar write is committed to the tuple storage and the changelog shard in a single transaction.

Zanzibar periodically runs data pipelines to perform different offline functions in Spanner; for example, one function takes dumps of relational tuples in each namespace at known snapshot timestamps and another function for garbage collection of old tuple versions than a configured threshold per namespace.

Relation Tuple storage Link to heading

- The primary key (shard ID, object ID, relation, user, commit timestamp) uniquely identifies each row.

- Multiple tuple versions are stored in different rows so checks and reads can be evaluated for any given timestamp.

- Clients configure the sharding of the databases using the

shard ID, which is generally determined byobject ID. - When the namespace holds too many objects, the sharding can be done by a combination of

object IDanduser.

Changelog storage Link to heading

- The watch server clusters use the change log storage to tail the change log on watch requests.

- The primary keys are (changelog shard ID, timestamp, unique update ID). (Changelog shard ID is randomly selected per read.)

- In Google, Spanner is given the changelog shard, which acts as a transaction coordinator to minimize blocking changelog reads on pending transactions.

Namespace configuration storage Link to heading

- Namespace configuration, i.e., the namespace metadata is stored in a database with two tables, one for configuration, which is keyed by

namespace ID, while the other stores the metadata changelog, which is keyed by commit timestamp. - This allows the Zanzibar server to load all configurations upon startup and continuously monitor the changelog to refresh the configuration.

Request Handling Link to heading

Evaluation Timestamp Link to heading

Zanzibar APIs support sending an optional zookie in the request with the encoded timestamp to process the ACL request. When this zookie is not provided, Zanzibar uses a default staleness to ensure all transactions are evaluated at a timestamp that is as recent as possible.

Since all ACL policies can be randomly sharded, the shards can be located in a different zone than the ACL servers, which can incur latency. To avoid such out-of-zone reads for data at default staleness, each ACL server tracks the frequency of each zone read at the current default staleness timestamp and uses these frequencies to compute a binomial proportion confidence interval of the probability that any piece of data is available locally at each staleness. Upon collecting enough data, the server checks to see if each staleness value has a sufficiently low chance of incurring out-of-zone. If no known staleness values are safe, then Zanzibar uses a two proportion z-test to see if increasing the staleness value would significantly reduce the out-of-zone read probability then the default staleness value is increased to improve the latency.

It should be noted that default staleness value adjustment is only a performance improvement and does not affect Zanzibar’s consistency.

Configuration Consistency Link to heading

Changes to a namespace configuration can change ACL evaluations; therefore, the correctness of the namespace configuration can affect Zanzibar’s consistency.

To maintain the correctness of the Zanzibar, choose a single snapshot timestamp for namespace configuration metadata when evaluating each request. All ACL servers in the cluster use that same timestamp for the same request, including any subrequests that fan out of the original client request.

A monitoring job tracks the timestamp range available to every server to ensure that all ACL servers in a cluster use the correct timestamp snapshot. It aggregates them, reporting a globally available range to every other server. The server picks a time from this range on each incoming request, ensuring that all servers can continue serving even if they can no longer read from the config storage.

Check Evaluation Link to heading

Zanzibar check evaluation checks if a user, U, is allowed in the relation recursively. It can be imagined as if a user has access to the content and then returns the ACL policy; otherwise, check if the parent user (or group) has access to the content, then return the ACL policy. This recursive operation is called “pointer chasing”.

All leaf nodes of the boolean expression tree are evaluated to minimize the check latency of this recursive operation. When the outcome of one node determines the result of the subtree, the evaluation of the other nodes in the subtree is canceled. (This has a side effect of causing a “cache stampede,” which is explained in the caching details in how the hotspots are handled.)

Leopard Indexing Link to heading

Maintaining low latency in a recursive “pointer chasing” operation can be complex with deeply nested with many child groups. To solve this problem, Zanzibar handles checks using Leopard Indexing, a specialized index that supports efficient set computations using a skip list.

To index and evaluate group membership, Zanzibar represents group membership with two set types:

- GROUP2GROUP(s) -> {e}, where

srepresents an ancestor group anderepresents a descendent group that is directly or indirectly a sub-group of the ancestor group. - MEMBER2GROUP(s) -> {e}, where

srepresents an individual user anderepresents a parent group in which the user is a direct member.1

To evaluate whether user U is a member of group G, we can use the following expression:

$$ (\text{MEMBER2GROUP(U)} \cap \text{GROUP2GROUP(G)}) \neq \emptyset $$

Group membership can be considered a reachability problem in a graph, where nodes represent groups and users and edges represent direct membership. Flattening group-to-group paths allows reachability to be efficiently evaluated by Leopard, though other types of denormalization can also be applied as data patterns demand. (This is one of the cases where Zanzibar uses a denormalized dataset.)

The Leopard Indexing system consists of three parts:

- A serving system capable of consistent low latency operations across sets.

- An offline periodic index-building system.

- An online real-time layer capable of continuously updating the serving system as the tuple changes occur.

As noted above, index tuples are stored as an ordered list of integers in a structure like a skip list to allow efficient union and intersection among sets. For example, evaluating the intersection between two sets, A and B, requires only O(min(|A|,|B|)) skip-list seeks.

Note: SpiceDB, an open-source permissions database for Zanzibar by authzed has an open proposal to use something called Tiger cache which can potentially complement the Leopard indexing system to make a Zanzibar system more performant.

The offline index builder generates index shards from a snapshot of Zanzibar relation tuples and configurations and replicates shards globally. The index builder respects userset rewrite rules and recursively expands edges in an ACL graph to form Leopard index tuples.

To maintain the incremental layer, the Leopard incremental indexer calls Zanzibar’s Watch API to receive a temporally ordered stream of Zanzibar tuple modifications and transforms the updates into a temporally ordered stream of Leopard tuple additions, updates, and deletions.1

In practice, a single Zanzibar tuple addition or deletion may yield tens of thousands of discrete Leop- ard tuple events. Each Leopard serving instance receives the complete stream of these Zanzibar tuple changes through the Watch API. The Leopard serving system is designed to continuously ingest this stream and update its various posting lists with minimal impact on query serving1.

Handling hotspots Link to heading

When Zanzibar receives a read/expand request, it can fan out over multiple servers and have many common groups and indirect ACLs. To facilitate consistency, Zanzibar stores data in a normalized way (apart from the case described in Leopard Indexing). Hotspots on common groups can arise when the data is normalized, overloading the database. Handling these hotspots is critical to scale the Zanzibar system.

Each ACL server cluster in Zanzibar has a distributed cache used for both read and check evaluations. The cache entries are distributed across these servers using consistent hashing so that recursive pointer chasing does not fan out to many ACL servers.

Since a namespace configuration can result in forwarding a request to evaluate indirect ACLs, a forwarding key is evaluated with {object#relation} values and is cached at the caller and the callee servers. This reduces the number of internal RPCs happening within the ACL server cluster.

With distributed caching, Zanzibar can potentially fall victim to the “cache stampede” problem, where multiple requests are received on a server after the cache invalidation, which can cause race to database calls and cache repopulation. To avoid this, Zanzibar maintains a lock table on each server to track the outstanding read and check requests. Only one request will begin processing among requests sharing the same cache key, while the rest are blocked until the cache is repopulated.

Distributed caches and lock tables handle a vast majority of hotspots. There are two additional improvements made to Zanzibar to improve the handling of hotspots further:

- Occasionally, a popular object invites many concurrent checks for different users, causing a hot spot on the storage server hosting relation tuples for the object 1. To avoid these hotspots, all relational tuples are read and cached in the distributed cache, a tradeoff for read bandwidth with caching ability. Hot objects are dynamically detected to apply this caching technique by tracking the outstanding reads on each object.

- Indirect ACL checks are frequently canceled when the result of the parent ACL check is determined (as discussed in Cache Evaluation), which can leave the cache key unpopulated. At the same time, this eager cancellation improves performance, but it negatively impacts caching and can even reduce caching performance by either causing a cache stampede or read operations getting stuck on lock tables. As a workaround, the eager cancellation of check evaluations is delayed when requests are waiting on lock table reads.

Performance Isolation Link to heading

In a distributed system like Zanzibar, performance isolation is critical to preventing a slow ACL server and reducing the system’s latency. To isolate performance, the following practices have to be followed:

- Ensure proper CPU capacity is allocated to each ACL server. To monitor the CPU capacity, RPC execution is measured in CPU seconds, which is a hardware-agnostic metric. Each Zanzibar client has a global limit on CPU usage per second, and RPCs are throttled if the CPU limit is exceeded and there are no spare CPU cycles available to the overall system.

- Zanzibar also limits the number of RPC calls to contain the system’s memory usage. The number of outstanding RPCs is also limited to improve memory.

- Zanzibar limits the maximum number of concurrent reads per object and/or client on each database server, ensuring no single object and/or client monopolizes a database server.

- Different lock table keys are used for different requests to prevent any throttling that the database applies to one client from affecting the other.

Tail Latency Mitigation Link to heading

Tail latency is the small percentage of response times from a system, out of all of the responses to the input/output (I/O) requests it serves, that takes the longest compared to the bulk of its response times.2

To avoid tail latency, Zanzibar uses request hedging, a gRPC retry policy to send the same requests requests to multiple servers. When a server receives a response for one of the requests, the other request is canceled.

In Zanzibar, a request is placed to at least two replicas of the backend services (ACL servers, database servers) in every geographical region where the backend servers are present. To avoid unnecessary multiplying load, the second request is only sent once it is established that the first request is slower than the Nth percentile of the request, which Zanzibar dynamically calculates.

Effective request hedging requires the requests to have similar costs. As we have seen, some authorization checks in Zanzibar can have indirect ACL checks, which can be time-consuming. For such check requests, request hedging can increase the system’s latency. To mitigate this, requests are not hedged to other Zanzibar servers; request hedging is only done for requests to Leopard or Database servers.

Conclusion Link to heading

In conclusion, Google’s Zanzibar demonstrates how global, consistent, and high-performance authorization systems can be implemented at scale, paving the way for more secure and efficient access control in modern applications.

Key takeaways from our exploration of Zanzibar include:

Scalability and Consistency: Zanzibar’s use of globally distributed databases, particularly Spanner, allows it to maintain consistency across billions of objects and users while providing low-latency responses.

Flexible Data Modeling: The system’s relational tuple model and namespace configurations offer great flexibility in defining and managing access control policies.

Performance Optimization: Techniques like Leopard Indexing, distributed caching, and request hedging demonstrate Zanzibar’s sophisticated approach to maintaining high performance under varying loads.

Hotspot Management: Zanzibar’s strategies for handling hotspots, including distributed caching and lock tables, showcase its robustness in real-world, high-demand scenarios.

Tail Latency Mitigation: The system’s approach to reducing tail latency through careful request hedging reflects a deep understanding of the challenges in distributed systems.

As authorization becomes increasingly complex in our interconnected digital world, systems like Zanzibar pave the way for more secure, efficient, and scalable access control. While Zanzibar is a proprietary Google system, its principles and techniques offer valuable insights for developers and architects working on large-scale authorization problems.

The evolution of such systems will likely continue, with potential improvements in areas like machine learning for adaptive access control, enhanced privacy features, and even more sophisticated caching and indexing techniques. As we move forward, the principles underlying Zanzibar will undoubtedly influence the next generation of authorization systems, contributing to a more secure and efficiently managed digital ecosystem.

Implementation examples Link to heading

After going through this I would highly recommend going through some tools that impliment the concepts of Zanzibar.

Authzed Link to heading

Authzed provides an entrprise authorization tool using a proprietary spiceDB which provides a highly efficient backend for the authorization system. Read Authzed documentation for more details.

Warrant.dev Link to heading

Warrant.dev is inspired by zanzibar which provides a highly scalable and finr grained authorization service for defining, storing, querying, checking, and auditing application authorization models and access rules 3. Warrant also provides differnt sdks and frontends to setup the authorization system yourself.

Keto Link to heading

Open Source (Go) implementation of “Zanzibar: Google’s Consistent, Global Authorization System”. Ships gRPC, REST APIs, newSQL, and an easy and granular permission language. Supports ACL, RBAC, and other access models 4.